Transformers vs Mixture of Experts: A Detailed Comparison

Explore the differences between Transformers and MoE models regarding performance and architecture.

Understanding the Efficiency of MoE Models

Question:

MoE models contain far more parameters than Transformers, yet they can run faster at inference. How is that possible?

Difference between Transformers & Mixture of Experts (MoE)

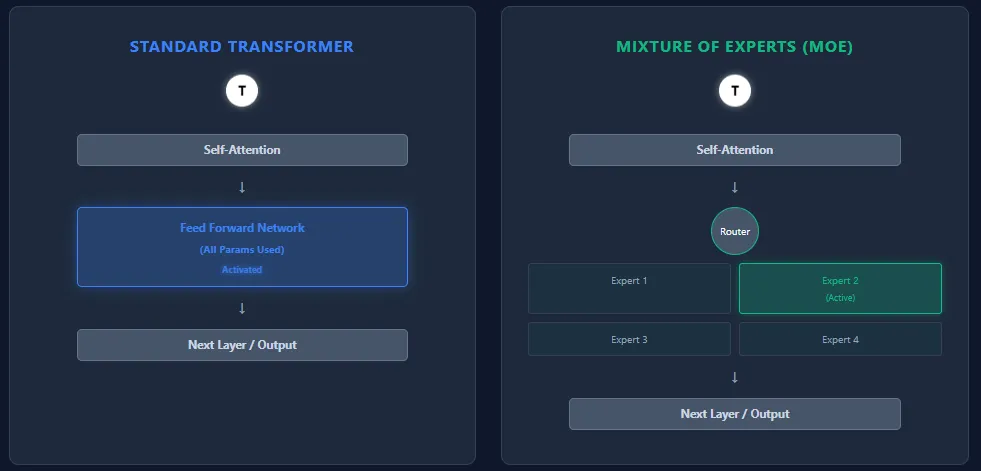

Transformers and Mixture of Experts (MoE) models share a common backbone architecture—self-attention layers followed by feed-forward layers—but they implement parameters and computations differently.

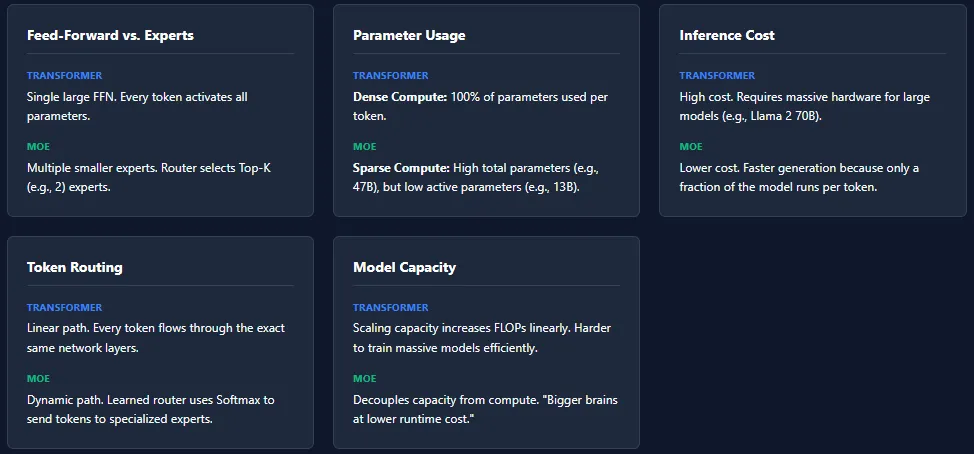

Feed-Forward Network vs Experts

- Transformer: A single large feed-forward network (FFN) is present in each block, which means every token activates all parameters during inference.

- MoE: Utilizes multiple smaller feed-forward networks, termed experts. A routing network selectively activates only a few experts (Top-K) per token, keeping the majority of parameters inactive.

Parameter Usage

- Transformer: Uses all parameters across layers for every token, resulting in dense computing.

- MoE: Contains a larger number of total parameters but activates only a small portion for each token, resulting in sparse computing. For example, Mixtral 8×7B has 46.7B parameters but utilizes around 13B per token.

Inference Cost

- Transformer: High inference costs due to the full activation of parameters. Models like GPT-4 or Llama 2 70B require substantial computational resources.

- MoE: Benefits from lower inference costs since only a few experts are active per layer, allowing faster and more economical performance, particularly at scale.

Token Routing

- Transformer: Lacks routing; every token traverses the same path through all layers.

- MoE: Employs a learned routing mechanism that designates tokens to experts based on softmax scores. This method increases specialization and model capacity as different experts may be activated for various tokens.

Model Capacity

- Transformer: Scaling capacity requires adding layers or expanding the FFN, which significantly increases FLOPs.

- MoE: Can expand total parameters immensely without adding to per-token computational cost, enabling larger models with lower runtime expenses.

While MoE architectures promise huge capacity and reduced inference costs, they also face training challenges, with expert collapse being one of the most notable. This occurs when the router consistently selects the same experts, leading to under-training of others.

Load imbalance presents another issue, where some experts receive disproportionately more tokens, causing uneven training. To mitigate this, MoE models utilize techniques such as noise injection, Top-K masking, and expert capacity limits.

These strategies ensure that all experts remain engaged and balanced in their learning processes, although they contribute to the complexity of training compared to standard Transformers.

Сменить язык

Читать эту статью на русском